The development of weather radar has been a game-changer in meteorology, particularly in tracking and forecasting severe weather. Before its advent, disasters such as the 1900 Galveston hurricane and the 1925 Tri-State Tornado caused immense damage and loss of life, in part because there was no effective way to monitor storms or give advance warning. Early airborne remote sensing techniques offered some storm-monitoring capability, but they were limited by poor visibility and high cost.

The breakthrough came with radar, invented in the 1930s and developed primarily for military use before and during World War II. Radar, which stands for “RAdio Detection And Ranging,” works by broadcasting radio waves and measuring the energy reflected back to the receiver. Thick cloud cover is opaque to visible light but largely transparent to radio waves, so radar can see through it. The tiny droplets that make up clouds return very little energy, whereas larger raindrops, sleet, and snowflakes reflect radio waves strongly enough for radar systems to detect and track them.
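The "ranging" part of the acronym comes from timing the echo: a pulse travels out to the target and back, so the one-way distance is half the speed of light multiplied by the round-trip time. The sketch below illustrates only that relationship. It assumes straight-line propagation at the vacuum speed of light, and the function name `echo_range_m` is hypothetical. Real weather radars also account for effects such as beam bending in the atmosphere.

```python
# Speed of light in vacuum (m/s). Radio waves in air travel very slightly
# slower; this sketch ignores that difference.
SPEED_OF_LIGHT = 299_792_458.0


def echo_range_m(round_trip_s: float) -> float:
    """Return the distance (m) to a reflecting target given the echo's round-trip time (s).

    The pulse covers the distance twice (out and back), so the one-way
    range is half of c * t.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_s / 2.0


# Example: an echo that returns 1 millisecond after the pulse is sent.
print(f"{echo_range_m(1e-3) / 1000:.1f} km")  # prints "149.9 km"
```

In other words, every millisecond of delay corresponds to roughly 150 km of range, which is why radar timing circuits must resolve very short intervals.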

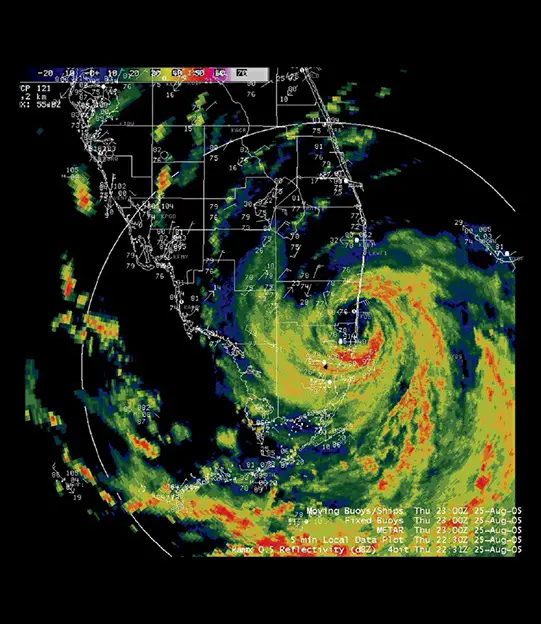

After World War II, the first civilian weather radar station was established in 1947 in Washington, DC, by the US Weather Bureau (now the National Weather Service). It marked the beginning of a rapidly expanding network of radar stations across the United States and, eventually, around the world. These stations have greatly improved the monitoring and forecasting of extreme weather and play a crucial role in aviation, shipping, and atmospheric research.